Actual Causation

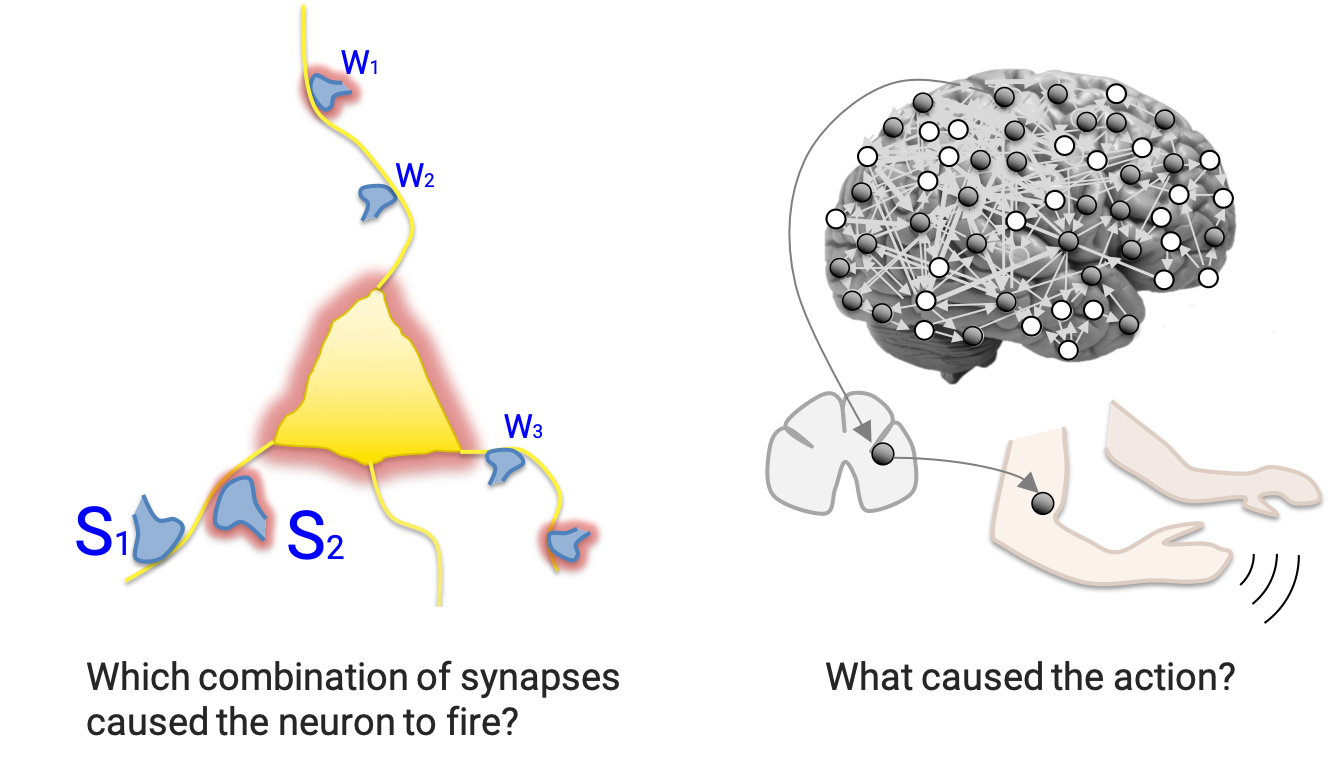

Actual causation is concerned with the question “What caused what?” Consider a transition between two states of a system of interacting elements, such as an artificial neural network or a biological brain circuit. Which combination of synapses caused the neuron to fire? Which image features caused the classifier to misclassify the picture? Even detailed knowledge of the system’s causal network (its elements, their states, connectivity, and dynamics) does not by itself provide a straightforward answer to the “what caused what?” question.

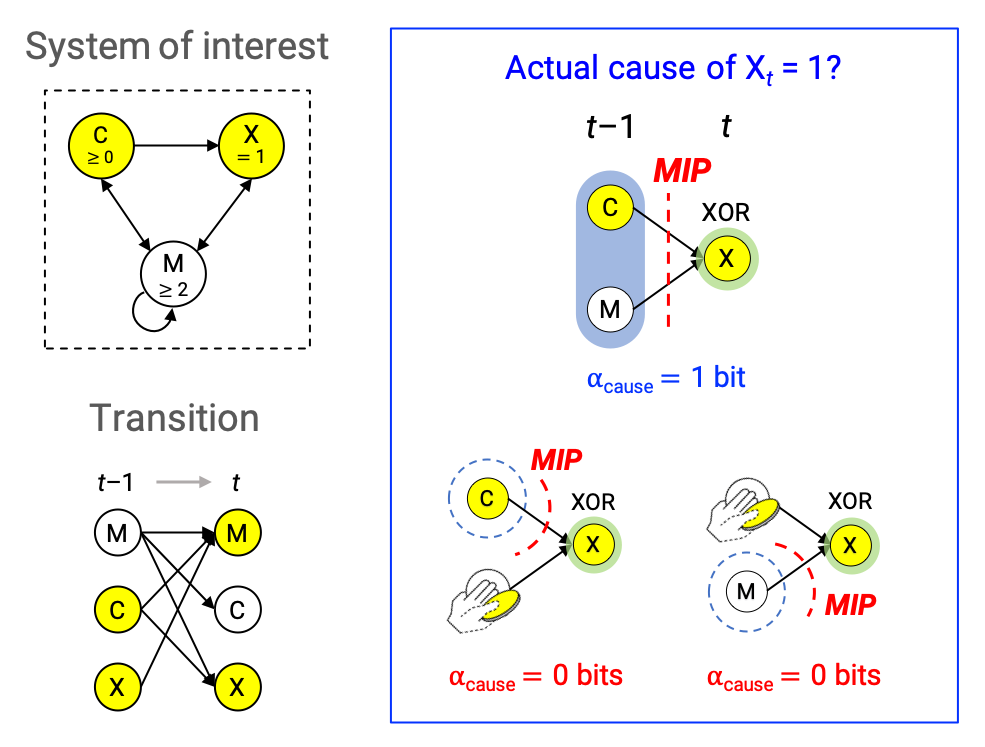

To address this question in a principled manner, we have developed a formal account of actual causation based on the five causal principles of integrated information theory (IIT): existence (here, realization), composition, information, integration, and exclusion. The formalism applies to discrete Markovian dynamical systems composed of interacting elements, and it extends naturally from deterministic to probabilistic causal networks and from binary to multi-valued variables. Finally, it allows us to quantify, in informational terms, the causal strength between an occurrence and its cause or effect (Albantakis et al. 2019).
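To make the setting concrete, here is a minimal Python sketch of a discrete Markovian system and of the realization principle. It rests on our own assumptions. The three binary elements A, B, C and their update rules (a noisy copy, an AND gate, and an OR gate) are hypothetical and invented for illustration. The elements at time t are also assumed to be conditionally independent given the state at t − 1, so that \( \pi(x_t \mid x_{t-1}) \) factorizes into per-element probabilities. On our reading of realization, a transition is considered only if this probability is non-zero.

```python
from math import prod

# Hypothetical 3-element binary system (illustration only). Each entry maps the
# previous global state (a dict) to the probability that the element is ON next.
NETWORK = {
    "A": lambda s: 0.9 if s["C"] else 0.1,    # noisy COPY of C (probabilistic)
    "B": lambda s: float(s["A"] and s["C"]),  # AND of A and C (deterministic)
    "C": lambda s: float(s["A"] or s["B"]),   # OR of A and B (deterministic)
}


def transition_prob(network, prev, curr):
    """pi(curr | prev), assuming elements update independently given prev,
    so the joint probability is the product of per-element probabilities."""
    def p(node):
        p_on = network[node](prev)
        return p_on if curr[node] else 1.0 - p_on
    return prod(p(node) for node in network)


def is_realized(network, prev, curr):
    """Realization (as assumed here): the transition must have non-zero probability."""
    return transition_prob(network, prev, curr) > 0.0


prev = {"A": 1, "B": 0, "C": 1}
print(transition_prob(NETWORK, prev, {"A": 1, "B": 1, "C": 1}))  # 0.9
print(is_realized(NETWORK, prev, {"A": 1, "B": 0, "C": 1}))       # False
```

Because each element is specified as a probability of turning ON, the same code covers deterministic gates (probabilities 0 or 1) and probabilistic ones, such as the noisy copy above.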

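\[ \alpha_{\textrm{cause}}(x_{t-1}, \; y_t) = \log_2 \left( \frac{\pi(x_{t-1} \mid y_t)}{\pi(x_{t-1} \mid y_t)_{\textrm{MIP}}} \right) \]

Here \( \pi(x_{t-1} \mid y_t) \) is the probability of the candidate cause \( x_{t-1} \) given the occurrence \( y_t \). The subscript MIP denotes the same probability computed after the transition is cut along its minimum information partition, which is the partition that makes the least difference to it.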
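The formula can be illustrated with a minimal Python sketch under simplifying assumptions. It handles a single binary effect element \( y_t \) with binary inputs, uses a uniform prior over input states, and causally marginalizes any input outside the candidate uniformly. Each mechanism is a function returning \( P(y_t = 1 \mid \text{inputs}) \). With a single-element \( y_t \), the sketch only considers partitions that cut a non-empty subset of \( x_{t-1} \) away from \( y_t \), and those cut inputs receive the unconstrained (uniform) repertoire. The function names (`forward_prob`, `cause_prob`, `alpha_cause`, `actual_cause`) and the OR-gate example are hypothetical and are not the API of any released library. The exclusion step in `actual_cause` simply returns every candidate that attains the maximal α and does not attempt any further tie-breaking.

```python
from itertools import combinations, product
from math import log2


def forward_prob(mech, n, fixed, y):
    """pi(y | fixed): inputs in `fixed` ({index: state}) are clamped; every other
    input is causally marginalized (averaged uniformly over its two states)."""
    free = [i for i in range(n) if i not in fixed]
    total = 0.0
    for states in product((0, 1), repeat=len(free)):
        full = {**fixed, **dict(zip(free, states))}
        p_on = mech(tuple(full[i] for i in range(n)))
        total += p_on if y == 1 else 1.0 - p_on
    return total / 2 ** len(free)


def cause_prob(mech, n, x, y):
    """pi(x | y): Bayes' rule with a uniform prior over the candidate's states."""
    if not x:
        return 1.0
    idx = sorted(x)
    den = sum(forward_prob(mech, n, dict(zip(idx, s)), y)
              for s in product((0, 1), repeat=len(idx)))
    return forward_prob(mech, n, x, y) / den


def alpha_cause(mech, n, x, y):
    """log2(pi(x|y) / pi(x|y)_MIP) for a single binary effect element y.
    Each partition cuts a non-empty subset of x away from y (cut inputs get a
    uniform repertoire); the MIP is the partition giving the smallest value."""
    whole = cause_prob(mech, n, x, y)
    idx = sorted(x)
    best = float("inf")
    for k in range(1, len(idx) + 1):
        for cut in combinations(idx, k):
            kept = {i: x[i] for i in idx if i not in cut}
            partitioned = cause_prob(mech, n, kept, y) * 0.5 ** k
            best = min(best, log2(whole / partitioned))
    return best


def actual_cause(mech, prev_state, y):
    """Score every non-empty subset of inputs in their actual states and keep
    those with maximal alpha (all ties are returned)."""
    n = len(prev_state)
    scored = []
    for k in range(1, n + 1):
        for idx in combinations(range(n), k):
            x = {i: prev_state[i] for i in idx}
            scored.append((alpha_cause(mech, n, x, y), x))
    best = max(a for a, _ in scored)
    return best, [x for a, x in scored if abs(a - best) < 1e-9]


def OR(s):
    """Hypothetical deterministic OR gate: P(C = 1 | A, B)."""
    return 1.0 if (s[0] or s[1]) else 0.0


# Transition {A=1, B=1} -> {C=1}; inputs are indexed A = 0, B = 1.
print(round(alpha_cause(OR, 2, {0: 1}, 1), 3))        # 0.415
print(round(alpha_cause(OR, 2, {0: 1, 1: 1}, 1), 3))  # 0.0
alpha_max, causes = actual_cause(OR, (1, 1), 1)
print(round(alpha_max, 3), causes)                    # 0.415 [{0: 1}, {1: 1}]
```

In this sketch, either input alone has α ≈ 0.415 bits (log₂ 4/3) as a cause of C = 1. The joint candidate {A = 1, B = 1} is fully reducible (α = 0), so the sketch leaves the actual cause indeterminate between A = 1 and B = 1.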

Moreover, the Actual Causation framework can be used to trace the causes of an action back in time (“causes of causes”). It can also evaluate the spatial and temporal extent to which internal mechanisms and states contributed to the actual causes of an agent’s actions, as opposed to the actions being driven by its sensory inputs (Juel et al. 2019).

References

- Albantakis L, Marshall W, Hoel E, Tononi G (2019). What caused what? A quantitative account of actual causation using dynamical causal networks. Entropy, 21(5), 459.

- Juel BE, Comolatti R, Tononi G, Albantakis L (2019). When is an action caused from within? Quantifying the causal chain leading to actions in simulated agents. arXiv preprint arXiv:1904.02995.

Acknowledgements

This demo was made possible by funding from an FQXi Mini Grant (FQXi-MGB-1810) and through the support of a grant from Templeton World Charity Foundation, Inc. (TWCF0196).